Abstract

Recent advances in text-to-image generation with diffusion models present transformative capabilities in image quality. However, user controllability of the generated image and fast adaptation to new tasks remain open challenges. They are currently addressed mostly by costly and lengthy re-training and fine-tuning, or by ad-hoc adaptations to specific image generation tasks. In this work, we present MultiDiffusion, a unified framework that enables versatile and controllable image generation using a pre-trained text-to-image diffusion model, without any further training or fine-tuning. At the center of our approach is a new generation process, based on an optimization task that binds together multiple diffusion generation processes with a shared set of parameters or constraints. We show that MultiDiffusion can be readily applied to generate high-quality and diverse images that adhere to user-provided controls, such as a desired aspect ratio (e.g., panorama) and spatial guiding signals ranging from tight segmentation masks to bounding boxes.

Method

Our key idea is to define a new generation process over a pre-trained reference diffusion model. Starting from a noise image, at each generation step we solve an optimization task whose objective is for each crop to follow its denoised version as closely as possible.
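One way to write this per-step optimization, assuming a weighted squared-error objective, is sketched below. The notation is introduced here for illustration: $J_t$ is the full image at step $t$, $F_i$ extracts crop $i$ (of $n$), $\Phi$ is the reference model's denoising step, and $W_i \ge 0$ are per-pixel weights for crop $i$.

$$
J_{t-1} = \arg\min_{J} \sum_{i=1}^{n} \sum_{p} W_i[p]\,\big(F_i(J)[p] - \Phi(F_i(J_t))[p]\big)^2
$$

Each pixel of $J$ enters this sum only through the crops that cover it, so the objective separates per pixel, and setting each derivative to zero yields a weighted average. Writing $F_i^{-1}$ for the map that pastes a crop back onto an all-zero canvas of full size, and dividing element-wise:

$$
J_{t-1} = \frac{\sum_{i=1}^{n} F_i^{-1}\big(W_i \otimes \Phi(F_i(J_t))\big)}{\sum_{i=1}^{n} F_i^{-1}(W_i)}
$$

This assumes every pixel is covered by at least one crop with positive weight, so the denominator never vanishes.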

Note that while each denoising step may pull in a different direction, our process fuses these inconsistent directions into a global denoising step, resulting in a high-quality, seamless image.
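A minimal sketch of such a fused step, assuming uniform crop weights and a squared-error objective (under which the optimum is the per-pixel average of the overlapping crop predictions). The names `crop`, `fused_step`, and `mean_denoiser` are hypothetical, and `mean_denoiser` is only a toy stand-in for the pre-trained model's denoising step.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]           # H x W single-channel image, row-major
Window = Tuple[int, int, int, int]  # (top, left, height, width) of one crop


def crop(img: Image, win: Window) -> Image:
    """Extract the part of `img` covered by `win`."""
    top, left, h, w = win
    return [row[left:left + w] for row in img[top:top + h]]


def fused_step(j_t: Image, windows: List[Window], t: int,
               denoise: Callable[[Image, int], Image]) -> Image:
    """Denoise each crop independently, then average overlapping predictions.

    With uniform weights, the per-pixel average minimizes
    sum_i ||crop_i(J) - denoise(crop_i(J_t), t)||^2 over the full image J.
    """
    height, width = len(j_t), len(j_t[0])
    acc = [[0.0] * width for _ in range(height)]  # sum of crop predictions
    cnt = [[0] * width for _ in range(height)]    # number of crops per pixel
    for win in windows:
        top, left, h, w = win
        pred = denoise(crop(j_t, win), t)
        for r in range(h):
            for c in range(w):
                acc[top + r][left + c] += pred[r][c]
                cnt[top + r][left + c] += 1
    # Pixels that no crop touches keep their current value (fallback only).
    return [[acc[r][c] / cnt[r][c] if cnt[r][c] else j_t[r][c]
             for c in range(width)] for r in range(height)]


def mean_denoiser(x: Image, t: int) -> Image:
    """Toy stand-in for the reference step: replace the crop by its mean."""
    m = sum(map(sum, x)) / (len(x) * len(x[0]))
    return [[m] * len(x[0]) for _ in x]


out = fused_step([[0.0, 0.0, 4.0, 4.0]], [(0, 0, 1, 3), (0, 1, 1, 3)],
                 t=10, denoise=mean_denoiser)
print(out)  # the two crops disagree (4/3 vs 8/3); the overlap gets 2.0
```

In the toy run the two crops pull the shared pixels toward different values, and the fused step resolves the conflict by averaging rather than letting either crop win.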

Applications

Text-to-Panorama generation

We demonstrate text-to-panorama results using MultiDiffusion at a resolution of 512x4608.
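One natural way to apply the crop-based process to a panorama is to tile overlapping windows across the wide canvas. The sketch below only enumerates such windows. It assumes, as values not stated above, a latent diffusion model with 8x spatial downsampling (so 512x4608 pixels become a 64x576 latent) and square 64x64 windows with a stride of 8. `window_starts` and `panorama_windows` are hypothetical helpers.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (top, left, height, width)


def window_starts(size: int, win: int, stride: int) -> List[int]:
    """Start offsets of windows of length `win` along an axis of length `size`."""
    if size < win:
        raise ValueError("axis is shorter than the window")
    starts = list(range(0, size - win + 1, stride))
    if starts[-1] != size - win:
        starts.append(size - win)  # add a final window flush with the far edge
    return starts


def panorama_windows(height: int, width: int, win: int = 64,
                     stride: int = 8) -> List[Window]:
    """All overlapping win x win crops tiling a height x width canvas."""
    return [(top, left, win, win)
            for top in window_starts(height, win, stride)
            for left in window_starts(width, win, stride)]


# Assumed setting: 512x4608 pixels with 8x downsampling -> 64x576 latent.
windows = panorama_windows(512 // 8, 4608 // 8)
print(len(windows), windows[0], windows[-1])  # 65 (0, 0, 64, 64) (0, 512, 64, 64)
```

The extra flush window in `window_starts` keeps the far edge covered when the canvas size minus the window size is not a multiple of the stride; a smaller stride gives more overlap per pixel at the cost of more denoising calls per step.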

Tight region-based generation

Our method can work with tight, accurate segmentation masks when the user provides them.

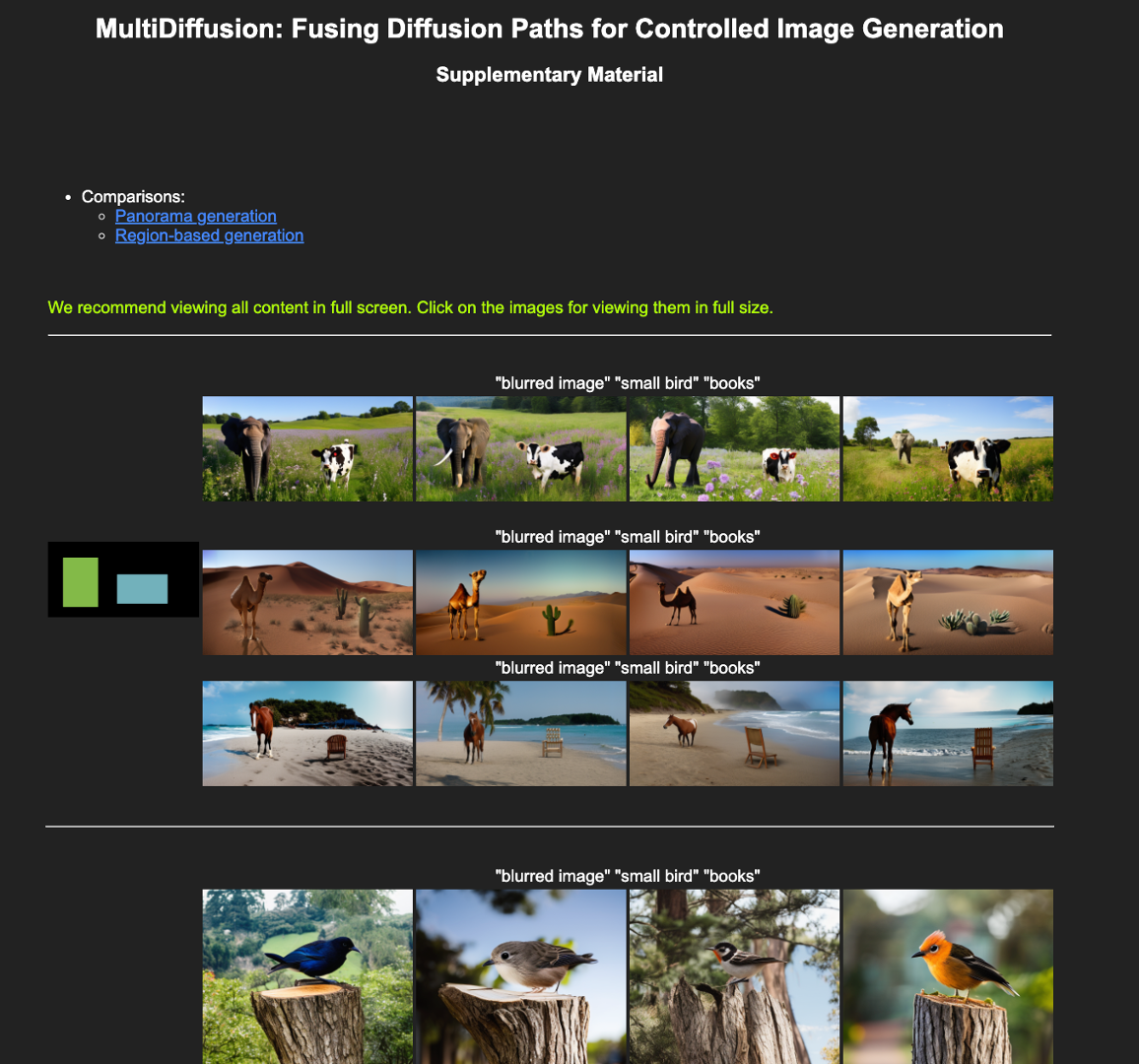
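With segmentation masks, one way to instantiate the same fusion is to let every "crop" be the full image, denoise it once per region prompt, and use each region's mask as that prediction's per-pixel weight. This is a sketch under stated assumptions: the masks are non-negative weights (e.g. 0/1 segmentations), and the text-conditioned step `denoise(image, t, prompt)`, `region_fused_step`, and the toy `constant_denoiser` are all hypothetical.

```python
from typing import Callable, List

Image = List[List[float]]  # H x W single-channel image
Mask = List[List[float]]   # H x W non-negative weights (e.g. 0/1 segmentation)


def region_fused_step(j_t: Image, masks: List[Mask], prompts: List[str], t: int,
                      denoise: Callable[[Image, int, str], Image]) -> Image:
    """Denoise the full image once per region prompt and blend by mask.

    Each pixel becomes the mask-weighted average of the per-prompt
    predictions; pixels with zero total weight keep their current value.
    """
    height, width = len(j_t), len(j_t[0])
    num = [[0.0] * width for _ in range(height)]  # weighted sum of predictions
    den = [[0.0] * width for _ in range(height)]  # total weight per pixel
    for mask, prompt in zip(masks, prompts):
        pred = denoise(j_t, t, prompt)
        for r in range(height):
            for c in range(width):
                num[r][c] += mask[r][c] * pred[r][c]
                den[r][c] += mask[r][c]
    return [[num[r][c] / den[r][c] if den[r][c] > 0 else j_t[r][c]
             for c in range(width)] for r in range(height)]


def constant_denoiser(x: Image, t: int, prompt: str) -> Image:
    """Toy stand-in: each prompt pulls every pixel toward a fixed value."""
    target = {"a red car": 1.0, "a sunny street": 0.0}[prompt]
    return [[target] * len(row) for row in x]


car = [[1.0, 1.0, 0.0, 0.0]]
street = [[0.0, 0.0, 1.0, 1.0]]
out = region_fused_step([[0.5] * 4], [car, street],
                        ["a red car", "a sunny street"], t=10,
                        denoise=constant_denoiser)
print(out)  # [[1.0, 1.0, 0.0, 0.0]]
```

Because the weights are the masks themselves, disjoint masks let each region follow its own prompt, while overlapping masks average their prompts' predictions on the shared pixels.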

Rough region-based generation

Additionally, our method can work with rough masks that novice users can obtain intuitively. Note that we can also work at arbitrary aspect ratios, as with the panoramas.

Paper

MultiDiffusion: Fusing Diffusion Paths for Controlled Image Generation

Supplementary Material

Bibtex